Since its birth many centuries ago, zero has occupied some of the greatest minds and crossed many borders, evolving from a simple placeholder into the driver of calculus.

Today, zero may be the most ubiquitous symbol in the world. In the story of zero, something can be made out of nothing. Behind this seemingly simple symbol that conveys nothing lies the story of an idea that took many centuries to develop, many nations to cross, and many minds to understand.

Understanding and working with zero is the foundation of our society today; without zero, we would lack calculus, financial accounting, the ability to perform arithmetic computations quickly, and, particularly in today’s connected world, computers. The story of zero is the story of an idea that has fired the imagination of great minds across the globe.

When someone thinks of one hundred, two hundred, or seven thousand, the image in his or her mind is a digit followed by a few zeros. Here the zero functions as a placeholder; that is, three zeros after a nine denote nine thousand rather than just nine hundred.
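The placeholder idea can be sketched in a few lines of Python. This is only an illustration: the helper names `place_values` and `value` are made up for this example, and it assumes an ordinary base-10 numeral written as a string.

```python
def place_values(numeral: str) -> list[int]:
    """Return what each digit contributes to a base-10 numeral, left to right."""
    n = len(numeral)
    # A digit in position i (counting from the left) is worth digit * 10^(n - 1 - i).
    return [int(digit) * 10 ** (n - 1 - i) for i, digit in enumerate(numeral)]


def value(numeral: str) -> int:
    """Sum the contributions of all digits to get the number itself."""
    return sum(place_values(numeral))


print(place_values("9000"))          # [9000, 0, 0, 0]
print(value("9000"), value("900"))   # 9000 900
```

The zeros contribute nothing themselves, yet each one pushes the nine one place to the left and multiplies its worth by ten.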

If one zero were missing, the number would change drastically. Just imagine having one zero erased from your income. Yet the number system we use today (Arabic, although it in fact originated in India) is relatively new. For centuries, people marked quantities with a variety of symbols and figures, but it was awkward to perform even the simplest arithmetic calculations with these number systems.

The Sumerians, for example, were the first to develop a counting system to keep an inventory of their goods: sheep, horses, and donkeys. The Sumerian system was positional; that is, the value of a symbol was determined by its position relative to other symbols. The Sumerian system was handed down to the Akkadians around 2500 BC and then to the Babylonians in 2000 BC. The Babylonians were the first to devise a mark to show that a number was missing from a column, just as the 0 in 1530 means that there are no ones in that number. Though the Babylonian ancestor of zero was a good start, it would still be centuries before the symbol appeared as we know it.

The renowned mathematicians of ancient Greece, who learned the fundamentals of their math from the Egyptians, had no name for zero, nor did their system feature a placeholder as the Babylonian one did. They may have pondered it, but there is no conclusive evidence that a symbol for it even existed in their language. It was the Indians who began to understand zero both as a symbol and as an idea.

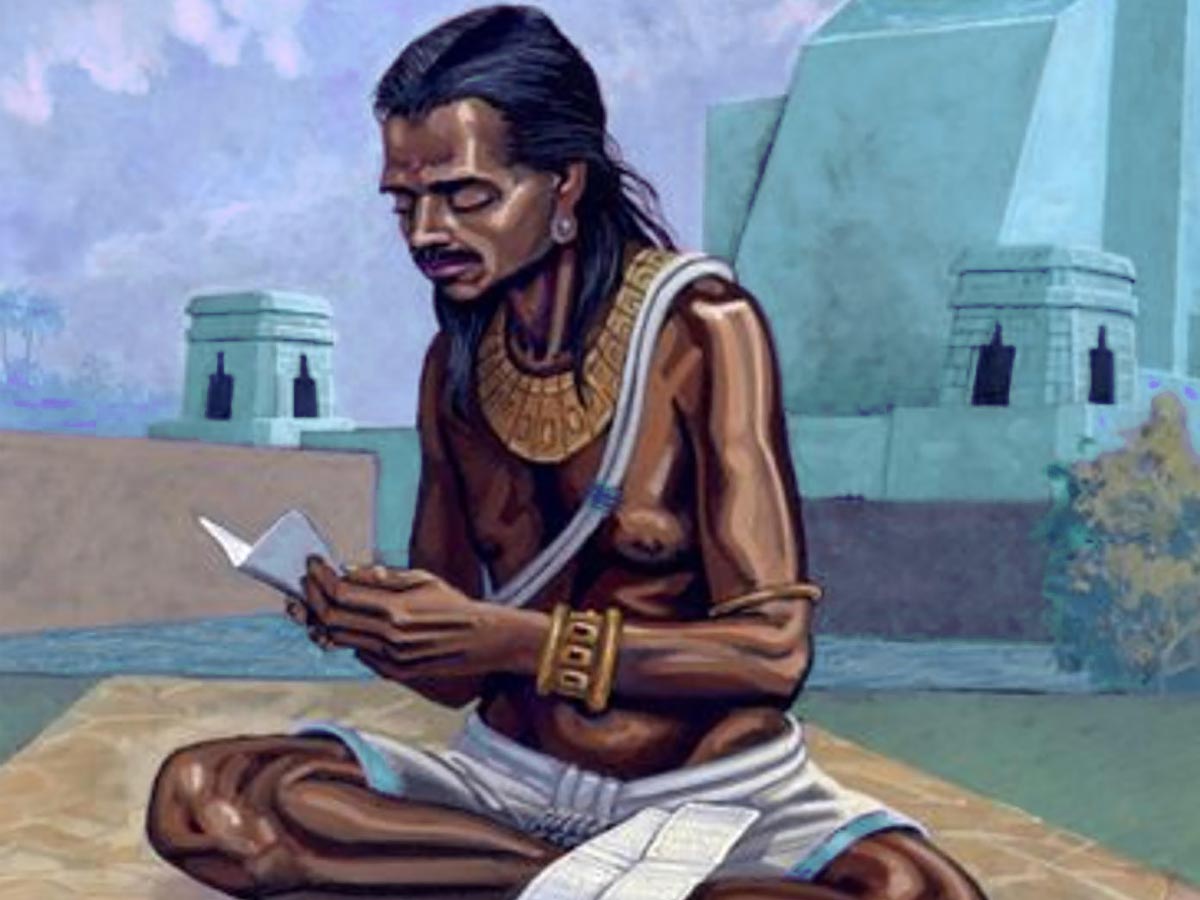

Brahmagupta was the first to formalize arithmetic operations using zero, around 650 AD. To signify a zero, he used dots underneath numbers. These dots were referred to alternately as ‘sunya,’ meaning empty, or ‘kha,’ meaning place. Brahmagupta wrote standard rules for reaching zero through addition and subtraction, as well as for the results of operations with zero. The only error in his rules was division by zero, which would have to wait for Isaac Newton and G.W. Leibniz to tackle.

By 773 AD, zero had reached Baghdad, where it would be developed by Arabian mathematicians, who based their numbers on the Indian system. In the ninth century, Mohammed ibn-Musa al-Khowarizmi was the first to work on equations that equaled zero, or algebra, as it has come to be known. He also developed quick methods for multiplying and dividing numbers, known as algorithms (a corruption of his name).

Al-Khowarizmi called zero ‘sifr,’ from which our word cipher derives. By 879 AD, zero was written almost as we know it now, as an oval, though in this case smaller than the other numerals. And, thanks to the Moors’ conquest of Spain, zero finally reached Europe; by the middle of the twelfth century, translations of Al-Khowarizmi’s work had made their way to England.

The Italian mathematician Fibonacci built on Al-Khowarizmi’s work with algorithms in his book Liber Abaci, or “Abacus book,” in 1202. Until that time, the abacus had been the most common instrument for arithmetic operations. Fibonacci’s developments, particularly the use of zero, were quickly noticed by Italian merchants and German bankers. Accountants knew their books were balanced when the positive and negative amounts of their assets and liabilities summed to zero. But governments were still wary of Arabic numerals because of the ease with which one symbol could be changed into another. Although it was outlawed, merchants continued to use zero in encrypted messages, which is how the word cipher, meaning code, came to derive from the Arabic sifr.
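The balancing check that the accountants relied on is easy to illustrate. The sketch below is a modern toy, not a reconstruction of medieval bookkeeping: the function name `is_balanced` and the ledger figures are invented, with assets entered as positive amounts and liabilities as negative ones.

```python
def is_balanced(entries_in_cents: list[int]) -> bool:
    """A ledger balances when its signed entries sum to exactly zero."""
    return sum(entries_in_cents) == 0


# Hypothetical ledger: assets are positive, liabilities are negative.
ledger = [125_000, 40_000, -90_000, -75_000]
print(is_balanced(ledger))           # True

# Dropping a single entry leaves a non-zero remainder, so the check fails.
print(is_balanced(ledger[:-1]))      # False
```

Amounts are kept as whole cents so that the comparison with zero is exact.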

Rene Descartes, the founder of the Cartesian coordinate system, was the next great mathematician to use zero. As anyone who has had to graph a triangle or a parabola knows, Descartes’ origin is (0,0). Although zero was now becoming more common, the developers of calculus, Newton and Leibniz, would take the final step in understanding it.

Adding, subtracting, and multiplying by zero are relatively simple operations. Division by zero, however, has puzzled even brilliant minds. How many times does zero go into ten? Or, how many non-existent apples go into two apples? The answer is indeterminate, but working with this concept is the key to calculus.

If you wanted to know your speed at a particular instant, you would have to measure the change in your position that occurs over a set period of time. By making that set period smaller and smaller, you could estimate your speed at that instant more and more accurately. In effect, as you make the change in time approach zero, the ratio of the change in position to the change in time comes to resemble some number divided by zero, the same problem that stumped Brahmagupta.
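A small numerical sketch makes the shrinking interval concrete. Everything in it is assumed for illustration: the `position` function (distance equal to the square of the elapsed time) is a hypothetical motion, and `average_speed` is simply distance covered divided by time elapsed.

```python
def position(t: float) -> float:
    """Hypothetical distance travelled (in metres) after t seconds."""
    return t ** 2


def average_speed(t: float, dt: float) -> float:
    """Change in position divided by change in time over the interval [t, t + dt]."""
    return (position(t + dt) - position(t)) / dt


t = 3.0
# Shrinking the interval brings the average speed closer and closer to 6 m/s.
for dt in (1.0, 0.1, 0.01, 0.001):
    print(dt, average_speed(t, dt))

# Setting the interval to exactly zero asks for 0 / 0, which Python refuses to compute.
try:
    average_speed(t, 0.0)
except ZeroDivisionError:
    print("an interval of exactly zero gives no answer")
```

The estimates settle toward a definite value even though the interval itself can never be set to zero, which is the behaviour calculus makes precise with the notion of a limit.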

In the 1600s, Newton and Leibniz solved this problem independently and opened the world to tremendous possibilities. By working with numbers as they approach zero, they gave birth to calculus, without which we would not have physics, engineering, and many aspects of economics and finance.

Zero is so familiar in the twenty-first century that talking about it seems like much ado about nothing. But it is precisely understanding and working with this nothing that has allowed civilization to progress. The development of zero across continents, centuries, and minds has made it one of humanity’s greatest achievements. Because mathematics is a universal language, and calculus its crowning achievement, zero exists and is used everywhere.

But, like its function as a symbol and a concept meant to denote absence, zero may still seem like nothing at all.